Fake News and Misinformation: How digital tech can help

This article applies design thinking and the innovation mode framing to one of our era's most pressing challenges. Using design thinking principles and the "How Might We" framework, we explore a technology-driven solution that combines AI capabilities with human judgment. The approach demonstrates how complex societal problems can be addressed through systematic innovation thinking.

How Might We use the latest digital technologies to tackle Fake News?

Fake news and misinformation are a major problem in the modern, connected world. Although various forms of propaganda have been around for ages, digital ‘fake news’ is becoming a major threat, partly due to the ease of creating, diffusing, and consuming content.

False or misleading stories can be easily created and diffused via the global online networks - in a matter of a few clicks. Fake stories become part of the personalized, endless feeds that people consume on a daily basis. And what makes the problem worse is that a significant percentage of the ‘social media users’ tend to consume content easily and share mechanically without the required critical thinking.

But even when critical thinking is there, it is not always easy to spot a fake story: with so-called ‘deep fakes’, it is already extremely difficult to tell whether what you see is true. The latest technologies make it possible to manipulate real videos, or create artificial ones, that very realistically show people doing things they never did. Moreover, synthesized speech that matches the voice of a known person can be used to attribute statements to that person that were never made.

The times when something was perceived as true just because it was ‘seen on TV’, in a photo, or in a video are gone.

Social media platforms, online users, websites, and blogs are all part of the problem to some extent. With the current digital setup, it is possible to design waves of Fake News that influence or even shape public opinion and (re)set global and local agendas. Fake stories are engineered to become viral: when distributed via social platforms they eventually get ‘promoted’ by regular users at scale, both intentionally and unintentionally. Unintentional ‘promotion’ happens due to a generalized lack of awareness: people do not realize how often they are exposed to Fake News; they do not know whether they are influenced by misleading content; and they do not realize that they may be part of the problem themselves, unintentionally promoting Fake News and influencing others.

People usually don’t realize that they may be part of the problem of Fake News by unintentionally promoting fancy false stories and influencing others.


Why is ‘Fake News’ a hard problem to solve?

What makes Fake News an extremely hard problem to solve is the difficulty in identifying, tracking, and controlling unreliable content. A good solution would require advanced digital technologies and protocols for assessing content (e.g. to efficiently spot and fact-check the less reliable content). It is also very difficult to raise awareness about the problem among social media users - as this would take time and it would require a huge amount of resources. For instance, raising awareness about the fake news problem would require a global, ongoing program that ‘educates’ online users to apply critical thinking when consuming and sharing digital content.

Handling probable Fake Stories is also difficult: even when there is early evidence that a Fake Story is being circulated online, there is not much to do until there is certainty about it. Acting on early signals alone, e.g. by removing a story or preventing people from sharing it, could be perceived as an attempt at intervention and censorship.

The problem itself is well understood and there are ongoing efforts within news corporations and social media companies to mitigate it. And, while some of these efforts may prove to be somewhat effective, the fake news problem proves to be bigger and more complicated. It goes beyond the boundaries of social media and news companies: it is primarily a social problem.

At the same time, Fake content is designed to be viral; its creators want it to spread organically and rapidly. Fake stories are engineered to attract attention and trigger emotional reactions so users are tempted to share the ‘news’ with like-minded people in their social networks. With the right tricks and timing, a false story can go viral in hours. More specifically, the ‘fake news industry’ takes advantage of the following ‘flaws’ of our online reality:

1. The global system values ‘Content performance’, not ‘Content Quality’

The performance of the global ‘news distribution network’ - including social media, news corporations, opinion leaders, and influencers - is usually measured in terms of ‘attention’ and ‘user engagement’. In many cases ‘content performance’ is based on CTR — Click Through Rate — along with user engagement and social sharing statistics:

An article with high CTR will probably make it to the top of social feeds or news websites — regardless of how informative, trustworthy, or useful it is.

With this definition of success and performance, digital content with fancy photos and ‘overpromising titles’ can easily perform well, regardless of the quality of the underlying story (if there is one). An attractive ‘promo card’ for an article with an impressive title is usually enough for people to start sharing with their friends and networks. This behavior can trigger viral effects for content with no substance or, even worse, with false information and misleading messages.

Content quality is rarely part of these KPIs — at least not among the important ones. Instead, it is the predicted performance of the content that is often most important for news and social media companies: websites and other online entities rush to reproduce stories that appear to be potentially viral; and they promote them so they get more traffic and serve more ads, to achieve their ambitious monetization goals.
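The gap between ‘content performance’ and ‘content quality’ can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `Article` fields, the reviewer-assigned `quality` score in [0, 1], and the idea of multiplying CTR by quality are not part of any real ranking system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Article:
    title: str
    impressions: int  # times the promo card was shown
    clicks: int       # times it was clicked
    quality: float    # hypothetical reviewer-assigned score in [0, 1]


def ctr(article: Article) -> float:
    """Click-through rate: clicks divided by impressions."""
    if article.impressions == 0:
        return 0.0
    return article.clicks / article.impressions


def rank_by_ctr(articles: list[Article]) -> list[Article]:
    """Ranking that rewards attention only."""
    return sorted(articles, key=ctr, reverse=True)


def rank_by_ctr_and_quality(articles: list[Article]) -> list[Article]:
    """Illustrative alternative: scale CTR by quality so weak stories sink."""
    return sorted(articles, key=lambda a: ctr(a) * a.quality, reverse=True)


articles = [
    Article("You won't believe what happened next", 10_000, 1_200, quality=0.1),
    Article("City council publishes audited budget", 10_000, 400, quality=0.9),
]
```

With these made-up numbers, `rank_by_ctr` puts the clickbait title first, while `rank_by_ctr_and_quality` puts the audited-budget story first. The point is only that the choice of metric decides what surfaces.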

2. Online users tend to ‘share a lot, easily’

Another aspect of the problem is the massive group of online users who act primarily as distributors and re-sharers of content, without having the necessary understanding of, or even a genuine interest in, what they share. It is sad to realize that in an era characterized by instant access to the world’s accumulated knowledge, the majority of online users are ‘passive re-sharers’: they don’t create original content; they just recycle whatever appears to be trendy or likable, with little or no judgment and critical thinking. Users of this class may consume and circulate fake news, and other types of poor content, and unintentionally become part of the fake news distribution mechanism.


The basis of a solution: a system to raise awareness

Obviously, there are entities that intentionally drive fake news — e.g. to achieve certain political, commercial, or other goals. As mentioned above, there is also a massive group of online users who unintentionally participate in the exponential spread of false stories. In fact, due to a lack of understanding and awareness, many users will probably never realize that they are part of the ‘fake news distribution mechanism’.

Raising the global awareness around Fake News should be a key objective in every serious attempt to tackle the problem - we must establish an ongoing measurement and communication of (at least) the following two aspects:

The circulation of fake news across the globe, on social media platforms and news sites, including its estimated reach and impact

The degree of unintentional participation of media companies, social platforms, and online users

Furthermore, we must understand the underlying patterns to make the identification of fake news easier and more reliable, as early as possible in their ‘growth’ journey. This knowledge should become available to all involved parties, including via special APIs that provide rules to programmatically estimate the probability of a given story being unreliable.

These could be achieved through an ongoing analysis of representative samples of the world’s digital content.

This analysis would rely on a hybrid of the latest digital technologies (e.g. natural language processing and advanced content understanding systems) and a global network of independent human reviewers who continuously analyze, score, and label content into an immutable, unified, global content store.

This content store would provide a solid basis for monitoring the phenomenon, measuring the impact of potential solutions, and, most importantly, quantifying the participation of every major news corporation and social media platform in the problem of Fake News - and let the world know.


The solution: A global registry of labeled Fake News

The proposed ‘Fake News Evaluation Network’ focuses less on the real-time classification of new content and more on a retrospective, large-scale ‘fake news’ analysis with the intent to quantify the problem, extract patterns, and share the derived knowledge with all the involved parties. This solution puts emphasis on measuring the level of responsibility of each of the involved stakeholders - news providers, publishers, or social media platforms - in an attempt to educate, raise global awareness, and influence the ‘corporate social responsibility’ strategies of online media companies.

The objective is to quantify the problem and raise global awareness by systematically taking snapshots of content that is assessed and labeled by humans.

Imagine a ‘content sampling’ process running on a daily basis — sampling the global content publishing and sharing activity. Powered by special crawlers and advanced NLP technologies, this process ‘listens’ for stories and ‘news’ across a representative set of major websites, social media, and popular blogs. It discovers and organizes ‘fresh content’ and ‘new content references’ into a unified, deduplicated, and immutable content store — specially designed to handle stories, facts, and their associations.
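A minimal sketch of what ‘unified, deduplicated, and immutable’ could mean in practice, assuming a content-addressed design in which each story is keyed by a hash of its normalized text. The `ContentStore` class, its methods, and the normalization rule are hypothetical names chosen for illustration; they only catch exact copies after whitespace and case normalization, not paraphrases.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivial copies hash identically."""
    return re.sub(r"\s+", " ", text).strip().lower()


class ContentStore:
    """Append-only store keyed by the SHA-256 of the normalized text.

    There is deliberately no update or delete method (illustrative design).
    """

    def __init__(self) -> None:
        self._records: dict[str, dict] = {}

    def add(self, text: str, source_url: str) -> str:
        key = hashlib.sha256(normalize(text).encode("utf-8")).hexdigest()
        record = self._records.get(key)
        if record is None:
            # First sighting: keep the text once and start its reference list.
            self._records[key] = {"text": text, "sources": [source_url]}
        elif source_url not in record["sources"]:
            # Known content seen at a new place: add a reference only.
            record["sources"].append(source_url)
        return key

    def get(self, key: str) -> dict:
        """Return a read-only snapshot of a stored record."""
        record = self._records[key]
        return {"text": record["text"], "sources": tuple(record["sources"])}

    def __len__(self) -> int:
        return len(self._records)


store = ContentStore()
first = store.add("Breaking:  Moon is made of cheese", "https://a.example/1")
second = store.add("breaking: moon is made of cheese ", "https://b.example/2")
```

Both calls return the same key, so the store holds one record with two source references. This mirrors the distinction made above between ‘fresh content’ and ‘new content references’.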

Newly identified content is unified and linked to its ‘master copy’, other related ‘stories’, and any relevant factual information and metadata. It is then compared against the already labeled content, with the objective to estimate the ‘degree of deviation from reality’ using ‘fact-checked versions of the same story’ and known patterns.
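Linking a new variant to its ‘master copy’ could start from something as simple as word-shingle Jaccard similarity, sketched below. This is an assumption, not the described system: the function names, the shingle size, and the 0.5 threshold are hypothetical, and lexical overlap only finds near-copies. Estimating the ‘degree of deviation from reality’ would additionally need claim-level comparison against fact-checked versions, which this sketch does not attempt.

```python
from typing import Optional


def shingles(text: str, size: int = 3) -> set[tuple[str, ...]]:
    """Set of overlapping word n-grams in the text."""
    words = text.lower().split()
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard(a: set, b: set) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def find_master(
    new_text: str, masters: dict[str, str], threshold: float = 0.5
) -> Optional[tuple[str, float]]:
    """Return (master_id, similarity) for the best match, or None."""
    new_shingles = shingles(new_text)
    best_id, best_score = None, 0.0
    for master_id, master_text in masters.items():
        score = jaccard(new_shingles, shingles(master_text))
        if score > best_score:
            best_id, best_score = master_id, score
    if best_id is None or best_score < threshold:
        return None
    return best_id, best_score


masters = {
    "m1": "the mayor announced a new bridge will open in march",
    "m2": "scientists confirm the vaccine passed its final safety trial",
}
match = find_master("the mayor announced a new bridge will open in april", masters)
```

Here the variant differs from `m1` in a single word, so it is linked to `m1`. That changed word (the opening month) is exactly the kind of detail a reviewer or a claim-level check would then need to examine.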

Furthermore, the platform can offer APIs to expose the patterns and knowledge extracted from the ongoing analysis of content in order to enable 3rd parties to predict the trustworthiness of new content — at ‘publish time’ or ‘share time’.

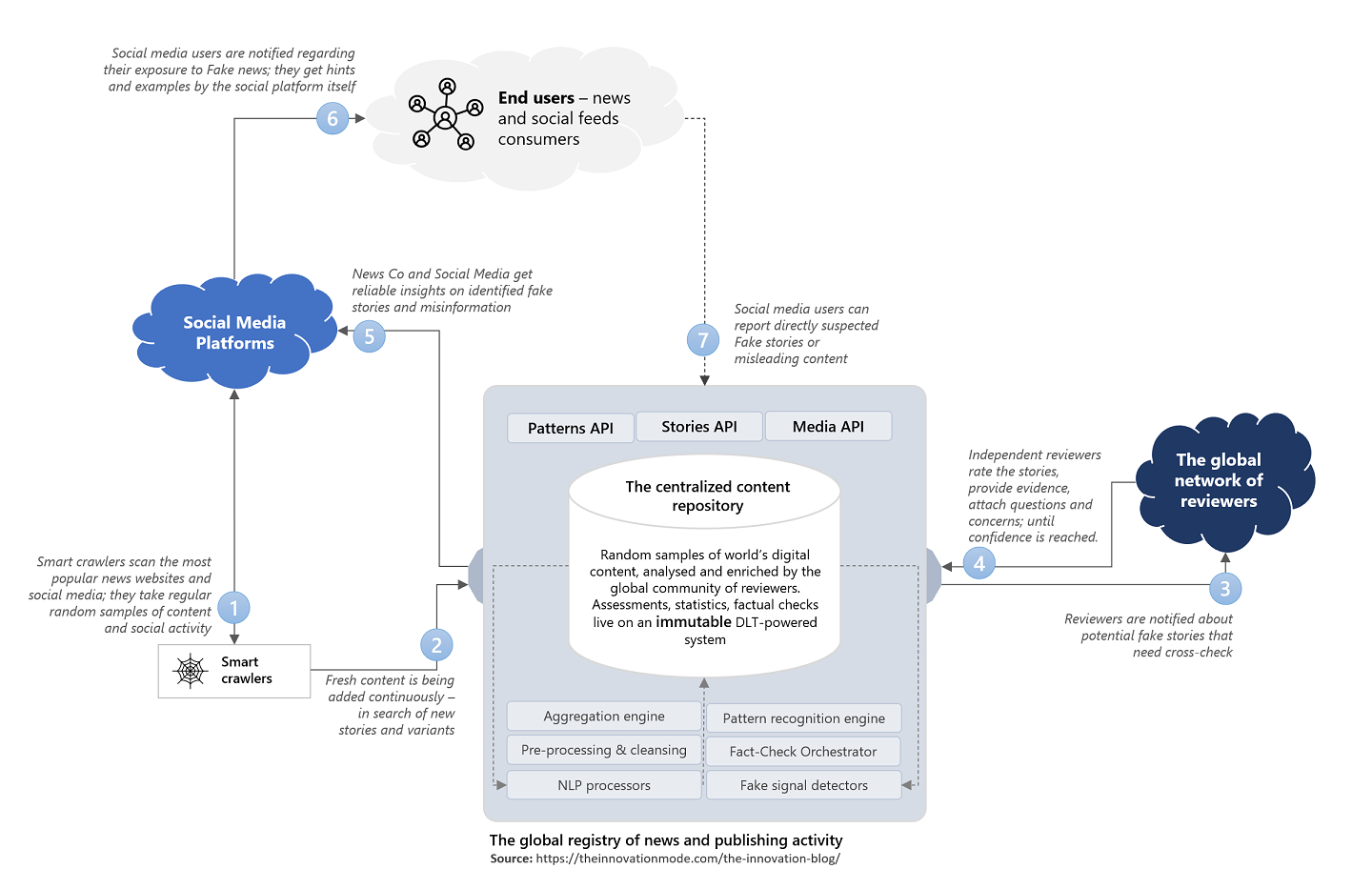

A high-level overview of the described Fake News solution - powered by AI and a network of independent reviewers

Artificial Intelligence adds significant value by enabling the identification of the ‘core story’ in the content (the key elements of a story e.g. named entities, the events, the occasion, the timeline, etc.) and finding other variations in the consolidated pool of content. AI agents would be able to find cross-posts and newer versions of the same story, along with references, user discussion threads, complaints - coming from different sources, in various languages, and levels of quality.


The ‘fact-checking orchestrator’ - as seen in the above chart - synthesizes the lists of stories that need to be evaluated by the global network of reviewers. This component simplifies the assessment process by making intelligent suggestions and recommendations to the reviewers - e.g. the stories that must be checked and what needs to be checked within each story. The global community of professionals and active ‘digital citizens of the world’ discover, evaluate, and vote for/against certain aspects of the story — with proper justification, inline references, and annotations.

As soon as a story gets enough confidence (votes and factual checks), AI generalizes the findings to all known variations of the story and different types of coverage, allowing quantification of the reliability of both the core story and its variants. AI components pick up the patterns and keep monitoring each story for new facts and events that need to be checked. All of this lives in the immutable content store: labeled content, assessments, publisher scores, and metadata are permanently stored as part of global history, with no deletions and no ‘phantom’ fake news.
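How ‘enough confidence’ might be decided, and how a label might then be generalized to known variants, can be sketched as follows. The thresholds `MIN_VOTES` and `MIN_AGREEMENT`, the label strings, and both function names are hypothetical; a real system would also weigh reviewer reliability and the attached evidence.

```python
from collections import Counter
from typing import Optional

MIN_VOTES = 5        # hypothetical: minimum number of reviewer votes
MIN_AGREEMENT = 0.8  # hypothetical: share of votes the top label needs


def consensus_label(votes: list[str]) -> Optional[str]:
    """Return the winning label once votes are numerous and consistent enough."""
    if len(votes) < MIN_VOTES:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) >= MIN_AGREEMENT else None


def label_story_and_variants(
    core_id: str, votes: list[str], variant_ids: list[str]
) -> dict[str, str]:
    """Apply the consensus label to the core story and all known variants."""
    label = consensus_label(votes)
    if label is None:
        return {}  # not enough confidence yet: nothing is labeled
    return {story_id: label for story_id in [core_id, *variant_ids]}
```

For example, five "false" votes and one "true" vote give 5/6 agreement, which clears the assumed 0.8 bar, so the core story and its variants would all be labeled "false". A 3 to 2 split would leave everything unlabeled.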

Social Media, news corporations, blogs, and other entities consume the APIs of this platform to self-assess their compliance and progress towards a “better content for the world” mission. As content is being evaluated in terms of trustworthiness, the reliability of those who produce it, promote it, or distribute it is also reflected:

A ‘publisher evaluation system’ quantifies how website A or social media B or news corporation C is part of the global fake news problem.
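One simple way such a publisher score could be computed is the share of a publisher's reviewed stories that were labeled fake. This is a sketch under stated assumptions: the `(publisher, story_id)` event format, the "fake" and "reliable" label strings, and the choice to ignore unreviewed stories are all hypothetical.

```python
from collections import defaultdict
from typing import Iterable


def publisher_scores(
    events: Iterable[tuple[str, str]], labels: dict[str, str]
) -> dict[str, float]:
    """Map each publisher to the fraction of its reviewed stories labeled fake.

    events: (publisher, story_id) pairs, one per published or shared story.
    labels: story_id -> "fake" or "reliable", for reviewed stories only.
    """
    fake = defaultdict(int)
    total = defaultdict(int)
    for publisher, story_id in events:
        label = labels.get(story_id)
        if label is None:
            continue  # unreviewed stories are not counted either way
        total[publisher] += 1
        if label == "fake":
            fake[publisher] += 1
    return {publisher: fake[publisher] / total[publisher] for publisher in total}


events = [("site-a", "s1"), ("site-a", "s2"), ("site-b", "s2"), ("site-b", "s3")]
labels = {"s1": "fake", "s2": "reliable"}
scores = publisher_scores(events, labels)
```

In this toy data `site-a` scores 0.5 and `site-b` scores 0.0. A production metric would likely weight by reach and sample size rather than use a raw ratio.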

Having this information, social media companies can take action, learn, and measure the level of their responsibility in spreading fake stories. They can let their users know that certain stories they have shared proved to be false and misleading. They can help the global effort by educating their users and demonstrating real social responsibility and meaningful actions towards a better-informed society.

Social media must also help by educating their users and demonstrating real social responsibility, with meaningful actions towards a better-informed society.

Companies could also consume special APIs in order to cross-check content at ‘share time’ and notify their users if the content is already flagged or there are signals for limited trustworthiness (while leaving the sharing decision to the user). But also retrospectively, social platforms could notify users who have already engaged with ‘verified fake stories’ (e.g. they have liked, shared, saved, commented on, or simply consumed) and explain to them how to avoid such content in the future.
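The two uses described above, a ‘share time’ check and a retrospective notification, can be sketched as follows. The in-memory `registry` dictionary stands in for the platform's API, and the names `ShareAdvice`, `check_at_share_time`, `users_to_notify`, and the `WARN_BELOW` threshold are all hypothetical.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

WARN_BELOW = 0.5  # hypothetical trust threshold, scores are in [0, 1]


@dataclass(frozen=True)
class ShareAdvice:
    allow_share: bool       # always True here: the user keeps the decision
    warning: Optional[str]  # message to show before sharing, if any


def check_at_share_time(story_id: str, registry: dict[str, float]) -> ShareAdvice:
    """Warn about low-trust stories without blocking the share."""
    score = registry.get(story_id)
    if score is not None and score < WARN_BELOW:
        return ShareAdvice(True, f"This story has a low trust score ({score:.2f}).")
    return ShareAdvice(True, None)  # unknown or trusted: no warning


def users_to_notify(
    engagements: Iterable[tuple[str, str]], registry: dict[str, float]
) -> set[str]:
    """Users who engaged with stories that now score below the threshold."""
    return {
        user
        for user, story_id in engagements
        if registry.get(story_id, 1.0) < WARN_BELOW
    }
```

Note that `allow_share` is never set to `False`: the sketch follows the text in leaving the sharing decision to the user and only attaching a warning.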

There are countless interesting use cases — including measurements of additional aspects of content quality, global trend analysis, and articulation of the dynamics of the phenomenon. The solution could be based on unified content on top of IPFS or Swarm — an immutable system hosting samples of the world’s content, unified, labeled, and scored in terms of trustworthiness and other qualities of digital content.

A platform powered by a genuine spirit of human collaboration, assisted by our most advanced and intelligent digital technologies.

2025 Update: Since this framework was first published, large language models have dramatically increased both the threat of AI-generated misinformation and the potential for AI-powered detection. The core architecture, human-AI collaboration with immutable verification, remains a promising approach, and related ideas of crowd-sourced review appear in features such as Community Notes.

The emergence of generative AI makes this framework more relevant than ever: as synthetic content becomes indistinguishable from authentic content, systematic verification infrastructure becomes essential.


Frequently Asked Questions About Fake News

Why is fake news so hard to stop?

Fake news persists because the global content system rewards performance (clicks, shares, engagement) over quality and accuracy. Additionally, users share content easily without verification, deep fakes make visual evidence unreliable, and early intervention risks being perceived as censorship. Solving the problem requires addressing all of these factors simultaneously through systematic innovation.

Can AI detect fake news?

AI can assist in fake news detection by identifying story patterns, finding content variations across sources, and flagging suspicious content for human review. However, AI alone cannot reliably determine truth — context, intent, and nuance require human judgment. The most effective approach combines AI capabilities (speed, scale, pattern recognition) with human fact-checkers (judgment, verification, context). This human-AI collaboration model is central to the framework described above.

What can social media companies do about misinformation?

Social media platforms can combat misinformation by: implementing content verification APIs to check stories at share-time, notifying users who engaged with subsequently-debunked content, publishing transparency reports on their misinformation metrics, and demonstrating corporate social responsibility through measurable action. The framework above provides APIs and scoring systems that enable all of these capabilities.

How does blockchain help fight fake news?

Blockchain and distributed, content-addressed systems (like IPFS) support immutability: once content is labeled and scored, any later change to that assessment is detectable, so the record is tamper-evident for as long as it is kept available. This prevents "phantom fake news" where debunked stories disappear and resurface. An immutable content store creates accountability and enables longitudinal tracking of how misinformation spreads and evolves.
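The underlying idea can be illustrated with a minimal hash chain, in which each entry commits to the one before it. This is a single-process sketch, not a blockchain and not the IPFS API; the entry layout and the `append` and `verify` helpers are assumptions for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, payload: dict) -> str:
    """Hash of an entry, covering both its payload and its predecessor."""
    body = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append(log: list[dict], payload: dict) -> None:
    """Add an assessment to the end of the log."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"prev": prev, "payload": payload, "hash": entry_hash(prev, payload)})


def verify(log: list[dict]) -> bool:
    """Check that no entry was altered, removed from the front, or reordered."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev:
            return False
        if entry["hash"] != entry_hash(entry["prev"], entry["payload"]):
            return False
        prev = entry["hash"]
    return True


log: list[dict] = []
append(log, {"story": "s1", "label": "fake"})
append(log, {"story": "s2", "label": "reliable"})
```

If someone later rewrites the first entry's label, or drops that entry, `verify` returns `False`, because every later hash depends on everything before it. Removing entries from the end is not detected by this sketch; guarding against that needs the latest hash to be published or replicated elsewhere.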

---

Solving Complex Problems Through Innovation

This framework for solving misinformation with advanced technologies demonstrates how Innovation Mode approaches complex challenges: identify systemic root causes, design for scale, combine human expertise with AI capabilities, and create measurable outcomes.

We apply this same methodology to help organizations tackle their most difficult problems — whether in product development, AI strategy, or social impact. Interested in this approach?

→ Explore Social Innovation Advisory
